AI is no longer a ‘nice to have’, but an important and necessary weapon in the fight against money laundering, terrorist financing and other financial crimes. One of the biggest factors driving demand for AI in AML is that criminals themselves use sophisticated technology.

But it goes without saying that change and investment carry risks and challenges.

My aim here is to give you insight into the risks and challenges associated with implementing machine learning to detect and prevent money laundering.

Before I dive in, it’s worth defining a few key terms and clarifying why AI has become so popular.

What is AI?

The FCA defines AI as the theory and development of computer systems that are able to perform tasks which previously required human intelligence.

Machine learning is a subset of AI that provides systems with the ability to automatically learn and improve from data, without the need for explicit programming. Computer programs access data and use it to learn for themselves, rather than following predetermined rules.

Two thirds of the financial institutions that responded to a 2019 Bank of England and FCA survey reported already using machine learning in some form.

AI and machine learning are often used as interchangeable terms. However, AI also encompasses other disciplines, such as advanced heuristics, decision management and computer vision.

It is widely believed that AI will transform our lives:

“AI has incalculable potential. It may be the next great disruptor in the financial services space, transforming virtually every area.”

Financier Worldwide Magazine

“Machine learning and artificial intelligence will impact our world just as profoundly as the invention of the personal computer, the internet, or the smartphone."

Hadi Partovi, founder and CEO, Code.org, cited in Human + Machine, 2018

Why is AI the go-to new technology for AML?

AI-powered AML systems enable compliance teams to cut through the noise of vast volumes of data and focus on high-risk red flags. The system automatically processes, monitors and analyses transactions, providing insights so that compliance staff can concentrate on investigating, and making decisions about, the alerts it generates.

In a previous blog, Napier CEO Julian Dixon explained three ways AI can take on money launderers more effectively: by reducing false positives, by giving compliance teams greater control, and by improving intelligence. Julian has also explained how machine learning can mitigate the risk of unethical business.

There are many benefits to using AI for compliance, but here is a summary of some of the biggest:

AI reduces false positives

False positives are one of the biggest thorns in the side of any compliance team, which is why reducing them is one of the most appealing benefits of applying AI:

“One of the areas in which AML could have the biggest impact is in the number of false positives detected by traditional parameter-based transaction monitoring systems. AI could potentially reduce the number of false positives financial institutions identify, which will lower compliance costs without compromising regulatory obligations.”

Financier Worldwide

AI enables continuous monitoring

Another important benefit of machine learning is that it automates the search for anomalous behaviour: machines, rather than people, continuously monitor activity and detect patterns as they emerge.
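To make continuous monitoring more concrete, here is a minimal sketch of one very simple technique: a per-account streaming z-score check. Each account's running mean and variance are updated as transactions arrive (Welford's online algorithm), and an amount far outside that account's own history is flagged. The class names, the 3-standard-deviation threshold and the five-transaction warm-up are illustrative assumptions, not how any particular AML product works; real systems use far richer features and models.

```python
import math
from dataclasses import dataclass


@dataclass
class RunningStats:
    """Running mean and variance via Welford's online algorithm."""
    count: int = 0
    mean: float = 0.0
    m2: float = 0.0  # running sum of squared deviations from the mean

    def update(self, x: float) -> None:
        self.count += 1
        delta = x - self.mean
        self.mean += delta / self.count
        self.m2 += delta * (x - self.mean)

    def std(self) -> float:
        # Sample standard deviation; treated as 0 until there are two observations.
        return math.sqrt(self.m2 / (self.count - 1)) if self.count > 1 else 0.0


class StreamingMonitor:
    """Flags amounts that deviate sharply from an account's own history.

    z_threshold and min_history are illustrative assumptions, not tuned values.
    """

    def __init__(self, z_threshold: float = 3.0, min_history: int = 5):
        self.z_threshold = z_threshold
        self.min_history = min_history
        self.stats: dict[str, RunningStats] = {}

    def observe(self, account_id: str, amount: float) -> bool:
        """Score one transaction as it arrives, then fold it into the account's profile."""
        stats = self.stats.setdefault(account_id, RunningStats())
        flagged = False
        if stats.count >= self.min_history:
            sd = stats.std()
            if sd > 0 and abs(amount - stats.mean) / sd > self.z_threshold:
                flagged = True
        stats.update(amount)
        return flagged


monitor = StreamingMonitor()
alerts = [monitor.observe("ACC-1", a) for a in [100, 120, 95, 110, 105, 98, 5000]]
print(alerts)  # [False, False, False, False, False, False, True]
```

Only the final 5,000 payment is flagged, because it sits many standard deviations above the account's earlier amounts. The sketch folds every transaction into the profile, including flagged ones; a real system might exclude confirmed suspicious activity so it does not become the account's new ‘normal’.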

In the face of vast volumes of data, identifying complex behavioural patterns in a timely manner is a task that is now virtually impossible for humans to do, let alone do effectively.

AI enables better decision making

By continuously analysing data from multiple sources, machine learning can in turn improve its own accuracy and support better decisions, including those around new, previously unidentified scenarios.

The popular and well-regarded book Human + Machine by Paul R. Daugherty and H. James Wilson explains how collaboration between humans and machines can achieve better overall decision making and continual performance improvement.

The authors highlight the following key benefits of AI:

- Helps to filter and analyse streams of data from a variety of sources

- Automates tedious, repetitive tasks, and in doing so, allows humans to be more human with greater job satisfaction

- Augments human skills and expertise

- Recognises anomalous patterns as they arise, in real time

- Learns from experience

Ultimately, AI can help organisations keep up with sophisticated criminals. It can support compliance teams facing increasingly stringent regulatory expectations, and it can help protect against the crisis of being linked to, or even facilitating, financial crime.

What do regulators say about AI?

Regulators such as the FCA and PRA are technology neutral, which means they neither require nor prohibit the use of particular technologies.

Regulators recognise the transformative potential of AI and machine learning. As you would expect, they also remain quietly cautious about possible limitations, including auditability and explainability.

“Criminals keep evolving to stay one step ahead using new, sophisticated technologies to get the upper hand. But we’re not sitting still either. We’re always looking for ways to help us do a better job, and we’re not afraid to use new technologies to turn the tables on criminals.”

FCA

“The FATF strongly supports responsible financial innovation that is in line with the AML/CFT requirements found in the FATF Standards, and will continue to explore the opportunities that new financial and regulatory technologies may present for improving the effective implementation of AML/CFT measures.”

FATF

A survey by the FCA and Bank of England found that financial institutions do not see regulation as an unjustified barrier to adopting machine learning. Some firms, however, feel more guidance is needed on how to interpret current regulation.

Risks and challenges in getting AI into production for AML

I’ve covered what AI is and why it is transforming compliance.

Now let’s turn to the risks and challenges of getting AI into production for AML. The automation of compliance activities is expected to be the biggest change for compliance in the next 10 years, so it’s prudent to acknowledge the hurdles you’re likely to encounter along the way.

1. AI is not a magic button that you switch on

If only there were a magic button to prevent all money laundering and terrorist financing.

Unfortunately, AI is not a panacea.

Regardless of which AI-powered AML system you use, there will be a significant amount of preparatory work to do first (more on this in point 2). And even once you are ready for full deployment, you won’t be able to hit the button and forget. In point 3, I explain more about the important ongoing roles that humans play.

When you invest in AI, be prepared for early results to take time and effort. AI is as much a cultural commitment from the top down as it is a financial investment. It is important to put the right governance and processes in place, and to clearly define target outcomes, before embarking on any machine learning project.

2. Don’t underestimate the task of data preparation

Machine learning is entirely dependent on structured, semi-structured and unstructured data. With no data, there is no learning.

To get started, your new system will first need to learn from your company’s historical data. The task of preparing data for machine learning should therefore not be underestimated, especially if your data is poorly organised.

As well as internal sources (such as account activity and transactions), data will need to be pulled from external sources (such as sanctions lists and credit ratings). This adds context that can yield new insights and improve the system’s accuracy.
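As an illustration of combining internal and external data, the sketch below enriches internal transactions with a sanctions flag and a credit rating. The record layout, the exact-match name screening and the in-memory lookup tables are all hypothetical simplifications; real sanctions screening relies on fuzzy matching, aliases and transliteration handling.

```python
import unicodedata
from dataclasses import dataclass


def normalise_name(name: str) -> str:
    """Upper-case a name, strip accents and punctuation, and collapse whitespace."""
    decomposed = unicodedata.normalize("NFKD", name)
    without_accents = "".join(c for c in decomposed if not unicodedata.combining(c))
    cleaned = "".join(c if c.isalnum() else " " for c in without_accents.upper())
    return " ".join(cleaned.split())


@dataclass
class Transaction:  # hypothetical internal record layout
    tx_id: str
    account_id: str
    counterparty: str
    amount: float


def enrich(transactions, sanctioned_names, credit_ratings):
    """Join internal transactions with external reference data.

    sanctioned_names: names taken from a sanctions list (hypothetical input).
    credit_ratings: dict of account_id -> rating from an external provider (hypothetical).
    """
    sanctioned = {normalise_name(n) for n in sanctioned_names}
    return [
        {
            "tx_id": tx.tx_id,
            "amount": tx.amount,
            # Exact match after normalisation only; real screening also needs fuzzy matching.
            "counterparty_sanctioned": normalise_name(tx.counterparty) in sanctioned,
            "credit_rating": credit_ratings.get(tx.account_id, "UNKNOWN"),
        }
        for tx in transactions
    ]
```

Normalising names before comparing them is a small example of the cleaning work described in the next paragraph: without it, “Acme Trading Ltd.” and “ACME TRADING LTD” would not match.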

Data should be managed as a company-wide activity in which capturing, cleaning, integrating, curating and storing data is integral to all operations. Reflecting just how important data is, research from Accenture shows that people who train AI applications spend approximately 90% of their time preparing data and engineering features.

For machine learning to work well, a good data supply is essential, both in terms of quality and quantity. The provider of your AI-driven AML solution should support you in achieving this.

3. Machine learning is a double-act with humans playing an important role

While machine learning does change the type of work humans do, it does not eliminate the need for humans. Paul R. Daugherty and H. James Wilson explain very clearly why humans and machines will need to work together. The machine will need to collect and process data, while humans will need to focus on analysing that data and making informed decisions.

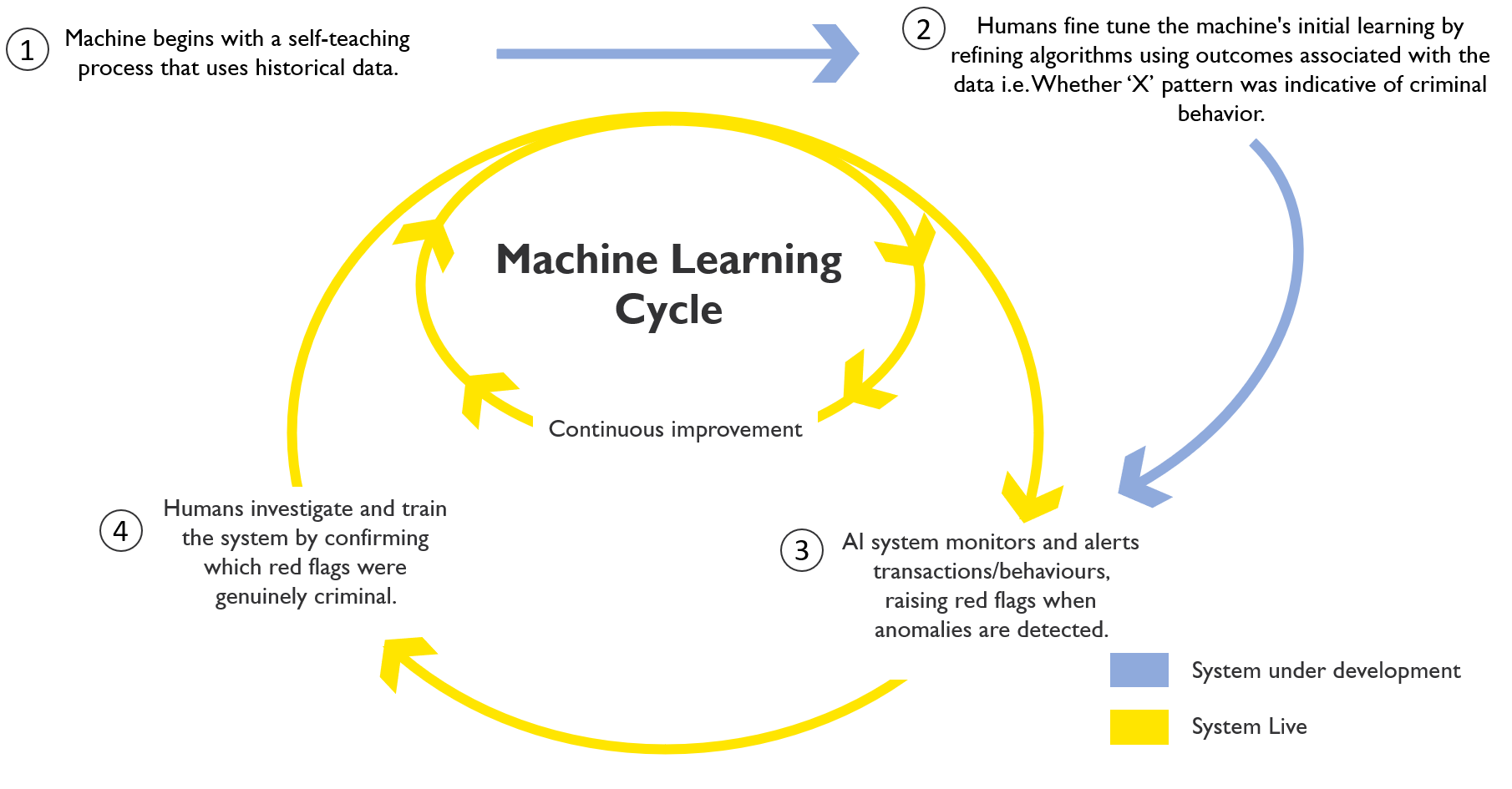

The machine learning cycle

Before a system that uses machine learning can be fully deployed, it will first need human input to fine-tune its initial ‘self-taught’ learning (see the image above).

This human input allows the machine to learn what anomalous behaviour looks like, and in turn to identify correctly when it occurs. Machines need to learn from both low-risk (normal) and high-risk (abnormal) behaviours.

Did the system correctly identify criminal activity (a true hit)? Or did the transaction pattern transpire to be normal behaviour (a false positive)?
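Analyst dispositions like these can be summarised into simple metrics. The sketch below assumes each reviewed alert is labelled either `"true_hit"` or `"false_positive"` (hypothetical labels) and computes the share of alerts that were genuine, often called precision. It cannot measure false negatives, since missed laundering never generates an alert in the first place.

```python
from collections import Counter

VALID_LABELS = {"true_hit", "false_positive"}  # hypothetical disposition labels


def alert_outcomes(dispositions):
    """Summarise analyst dispositions of reviewed alerts."""
    counts = Counter(dispositions)
    unexpected = set(counts) - VALID_LABELS
    if unexpected:
        raise ValueError(f"unexpected labels: {sorted(unexpected)}")
    total = counts["true_hit"] + counts["false_positive"]
    return {
        "alerts_reviewed": total,
        "true_hits": counts["true_hit"],
        "false_positives": counts["false_positive"],
        # Precision: the share of alerts that turned out to be genuine.
        "precision": counts["true_hit"] / total if total else 0.0,
        "false_positive_share": counts["false_positive"] / total if total else 0.0,
    }
```

For example, two true hits and eight false positives give a precision of 0.2: eight in ten alerts consumed analyst time without uncovering anything.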

The biggest challenge here is that learning isn’t a tick and forget exercise. Criminals are constantly changing their tactics in an attempt to remain under the radar. Covid-19 is causing significant changes to the money laundering and financial crime environment right now.

For these reasons, most of a machine’s learning will occur when the system is live, as a result of humans reviewing the alerts generated by the system.

When analysts feed their knowledge back into the machine, it can improve the accuracy and relevance of its alerts, reducing false positives and helping to avoid false negatives.
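A minimal sketch of this feedback loop, assuming a simple logistic-regression scorer that takes one stochastic-gradient step each time an analyst confirms an alert as a true hit or dismisses it as a false positive. The feature names, learning rate and zero starting weights are hypothetical; real systems typically retrain on batches of reviewed alerts under model governance rather than updating after every decision.

```python
import math


class FeedbackScorer:
    """A tiny logistic-regression alert scorer updated online from analyst verdicts."""

    def __init__(self, feature_names, learning_rate=0.1):
        self.feature_names = list(feature_names)
        self.weights = {name: 0.0 for name in self.feature_names}
        self.bias = 0.0
        self.learning_rate = learning_rate

    def score(self, features):
        """Risk score in (0, 1); missing features are treated as 0."""
        z = self.bias + sum(self.weights[n] * features.get(n, 0.0) for n in self.feature_names)
        return 1.0 / (1.0 + math.exp(-z))

    def feedback(self, features, is_true_hit):
        """One gradient step on the log-loss using the analyst's verdict as the label."""
        error = (1.0 if is_true_hit else 0.0) - self.score(features)
        self.bias += self.learning_rate * error
        for n in self.feature_names:
            self.weights[n] += self.learning_rate * error * features.get(n, 0.0)
```

With all-zero weights every alert scores 0.5. After repeated verdicts marking a high-cash, new-counterparty pattern as a true hit and a low-cash, familiar-counterparty pattern as a false positive, the first scores well above 0.5 and the second well below it, which is the mechanism by which feedback reduces false positives over time.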

Machine alerts need to be investigated

Aside from training the machine to identify suspicious behaviour accurately, analysts will also need to use their skills and experience to understand the data and act on it accordingly.

The nature of job roles will therefore increasingly shift from mundane data processing to high-risk investigative work.

4. Risks need to be managed with care

The introduction and use of AI and machine learning for compliance carries several risks that need to be carefully managed.

A 2019 study of almost 300 financial institutions by the Bank of England and FCA showed that respondents recognise a range of risks that may arise from the use of machine learning. Crucially, however, they also felt they knew how to address them.

“Firms do not think the use of machine learning necessarily generates new risks. Rather, they consider it as a potential amplifier of existing risks.”

FCA/BoE, 2019

Specifically, the survey highlights the following risks:

- Machine learning models are increasingly large and complex, and therefore demand appropriate risk management and controls processes.

- A lack of machine learning model explainability means how the machine works cannot always be easily validated, controlled and governed.

- Machine performance may suffer in scenarios the model has not encountered before, or where human experience, knowledge and judgement are required.

- Staff may not be sufficiently trained to use the system, or to understand and address its risks.

- Issues with data quality and algorithms, including biased data, can produce unintended results, inaccurate predictions and poor decisions.

The study highlights several ways to mitigate these risks, including model-validation techniques, appropriate staff training and the application of a data quality validation framework. Several safeguards can also be implemented, such as alert systems.

As I explained in point 2, the importance of data quality cannot be overemphasised: the intelligence you get out of machine learning can only be as good as the data that goes in.
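Because output quality depends so heavily on input quality, it helps to check records before they reach the model. The sketch below shows a few rule-based checks of the kind a data quality validation framework might include; the required fields and the three-currency whitelist are hypothetical examples, not a recommended rule set. Rejected records are kept with their reasons so they can be fixed at source rather than silently dropped.

```python
REQUIRED_FIELDS = ("tx_id", "account_id", "amount", "currency", "timestamp")
KNOWN_CURRENCIES = {"GBP", "EUR", "USD"}  # illustrative subset, not a real reference list


def validate_record(record):
    """Return a list of data-quality issues for one transaction record (empty means clean)."""
    issues = [f"missing {f}" for f in REQUIRED_FIELDS if record.get(f) in (None, "")]
    amount = record.get("amount")
    if amount not in (None, ""):
        # bool is a subclass of int in Python, so exclude it explicitly.
        if isinstance(amount, bool) or not isinstance(amount, (int, float)) or amount <= 0:
            issues.append("amount must be a positive number")
    currency = record.get("currency")
    if currency and currency not in KNOWN_CURRENCIES:
        issues.append(f"unknown currency {currency}")
    return issues


def quality_report(records):
    """Split records into clean ones and rejected ones, keeping the reasons for rejection."""
    clean, rejected = [], []
    for record in records:
        problems = validate_record(record)
        if problems:
            rejected.append((record, problems))
        else:
            clean.append(record)
    return clean, rejected
```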

One way to manage the above types of risk is to use open source models and techniques so that algorithms and approaches are auditable. It is also good practice to use the latest machine learning explainability frameworks to add clarity to the output of the models.
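As a small illustration of explainability, the sketch below breaks a linear risk score into per-feature contributions (weight times value) and ranks them, so an analyst can see which factors drove an alert. For a linear model this decomposition is exact relative to an all-zero baseline; for complex models, open source frameworks such as SHAP and LIME estimate comparable attributions. The feature names and weights in the example are hypothetical.

```python
def explain_linear_score(weights, bias, features, top_n=3):
    """Decompose a linear risk score into per-feature contributions (weight * value).

    Returns the score and the top_n contributions ranked by absolute size.
    """
    contributions = {name: w * features.get(name, 0.0) for name, w in weights.items()}
    score = bias + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return score, ranked[:top_n]


# Hypothetical model weights and one transaction's features.
weights = {"cash_ratio": 2.0, "new_counterparty": 1.5, "account_age_years": -0.5}
features = {"cash_ratio": 0.9, "new_counterparty": 1.0, "account_age_years": 4.0}
score, drivers = explain_linear_score(weights, bias=-1.0, features=features)
for name, contribution in drivers:
    print(f"{name:>18}: {contribution:+.2f}")
```

Here the account's age is the largest single factor, pulling the score down by 2.0, while the high cash ratio and new counterparty push it up. An explanation in these terms is something a reviewer or auditor can check against policy.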

5. You will need to crawl before you walk, and walk before you run

If there’s one theme that keeps cropping up, it’s that you need to get the basics right before you implement AI.

This process can take a significant amount of time and energy, but it will ensure you maximise the benefits of machine learning and, in turn, gain a competitive advantage that is difficult to replicate.

While it may be possible to largely skip crawling, and even walking, if you jump right in and try to run, you are likely to stumble.

Aside from organising your data and training staff on how to use the system, much of the preparation for AI and machine learning rests in perfecting your transaction monitoring rules. It’s really important to get your rules right because AI should initially complement rather than replace traditional systems.

Running your rules-based AML system alongside a new AI-powered system allows the old system to assist in the training of the new. It will also enable you to challenge the traditional system, so you can concurrently improve the performance of both.
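Running the two systems side by side produces data that can be compared directly. The sketch below assumes each system outputs a set of alerted transaction IDs and that analysts have confirmed which of them were genuinely suspicious (all hypothetical inputs). It reports where the systems agree, where they disagree and the precision of each, pointing reviewers at the cases most likely to improve either the rules or the model. Analyst dispositions on rule-generated alerts can likewise serve as training labels for the new system.

```python
def compare_systems(rule_alerts, model_alerts, confirmed_suspicious):
    """Compare a rules engine and an ML model run in parallel on the same transactions.

    Each argument is a set of transaction IDs; confirmed_suspicious holds the alerts
    analysts confirmed as true hits (hypothetical inputs).
    """

    def precision(alerts):
        return len(alerts & confirmed_suspicious) / len(alerts) if alerts else 0.0

    rules_only = rule_alerts - model_alerts
    model_only = model_alerts - rule_alerts
    return {
        "both": rule_alerts & model_alerts,
        "rules_only": rules_only,
        "model_only": model_only,
        "rules_precision": precision(rule_alerts),
        "model_precision": precision(model_alerts),
        # Confirmed cases caught by one system but not the other: the most useful
        # examples for retraining the model or refining the rules.
        "caught_only_by_rules": confirmed_suspicious & rules_only,
        "caught_only_by_model": confirmed_suspicious & model_only,
    }
```

In this framing, a confirmed case caught only by the rules is a training gap for the model, while one caught only by the model is evidence for challenging and updating the rules.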

Final thoughts

If you’re ready to embed AI into your AML compliance processes, with the right focus and commitment it’s possible to transform how your team works and the results they can achieve.

However, with that vision in place, it is essential to get the basics right first: good data, robust rules, comprehensive staff training and careful risk management. The road to full AI deployment will be exciting but will certainly require buy-in and leadership from the top.

As time progresses, we will see the vast majority of regulated organisations adopt AI for AML compliance. It’s not a case of ‘if’, but ‘when’.

Crucially, armed with the power of machine learning, compliance teams can focus their time and effort on high-value investigative activities to effectively identify money laundering and other financial crimes.

Request a demo

If you would like to learn more about how our AI can vastly improve your AML compliance processes, please do not hesitate to contact us to speak with an expert or request a demo of our cutting-edge systems.
